There are a couple of things to consider when dealing with large file uploads and ASP.NET Web API. First of all, there are the typical issues related to hosting within IIS, and the limits that the ASP.NET framework imposes on the service by default.

Secondly, there is the usual problem of running out of memory when external parties or users are allowed to upload large files that get buffered. Streamed uploads and downloads can greatly improve the scalability of a solution, because they remove the need for large in-memory buffers.

Let’s have a look at how to tackle these issues.

1. Configuring IIS

If you are web hosting within IIS, you have to deal with maxAllowedContentLength and/or maxRequestLength, depending on the IIS version you are using.

Unless explicitly modified in web.config, the default maximum upload size in IIS 7 is 30,000,000 bytes (28.6 MB). If you try to upload a larger file, the response will be an HTTP 404.13 error.

If you use IIS 7+, you can modify the maxAllowedContentLength setting (expressed in bytes); the example below raises it to 2 GB:

```xml
<system.webServer>
  <security>
    <requestFiltering>
      <requestLimits maxAllowedContentLength="2147483648" />
    </requestFiltering>
  </security>
</system.webServer>
```

2. ASP.NET

Additionally, the ASP.NET runtime has its own request size limit, located under the httpRuntime element of web.config. IIS 6 relied on this limit as well, so if you use that version, this is the only place you need to modify.

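By default it’s set to 4 MB (4096 kB). You need to raise it as well; note that, unlike the previous setting, it is specified in kilobytes, not bytes! The largest value it accepts is 2097151 kB, just under 2 GB:

```xml
<system.web>
  <httpRuntime maxRequestLength="2097151" />
</system.web>
```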

It’s worth remembering that, of these two settings, whichever request length limit is smaller takes precedence.
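To make the unit mismatch and the "smaller limit wins" rule concrete, here is a small C# sketch. The `UploadLimits` class and its members are hypothetical helpers written only for this illustration, not part of ASP.NET or IIS; they convert `maxRequestLength` from kilobytes to bytes and take the minimum of the two settings.

```csharp
using System;

// Hypothetical helper (not an ASP.NET/IIS API): models how the two web.config
// limits combine into the upload size that actually gets through.
public static class UploadLimits
{
    // IIS 7+ <requestLimits maxAllowedContentLength> default, in bytes.
    public const long IisDefaultBytes = 30_000_000;

    // ASP.NET <httpRuntime maxRequestLength> default, in kilobytes.
    public const long AspNetDefaultKilobytes = 4_096;

    // maxRequestLength is expressed in kB, so convert it before comparing.
    public static long KilobytesToBytes(long kilobytes) => kilobytes * 1024;

    // Whichever limit is smaller takes precedence, so the effective limit is the minimum.
    public static long EffectiveLimitBytes(long maxAllowedContentLengthBytes, long maxRequestLengthKilobytes) =>
        Math.Min(maxAllowedContentLengthBytes, KilobytesToBytes(maxRequestLengthKilobytes));
}
```

With the defaults, `UploadLimits.EffectiveLimitBytes(UploadLimits.IisDefaultBytes, UploadLimits.AspNetDefaultKilobytes)` comes out at 4,194,304 bytes, so it is the 4 MB ASP.NET limit that bites first, even though IIS on its own would allow roughly seven times more.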

3. Out of memory through buffering

By default, Web API buffers the entire request input stream in memory. Since we have just raised the upload limit to 2 GB, you can easily imagine how this could quickly cause an OutOfMemoryException, e.g. with just a couple of simultaneous uploads going on.

Fortunately, there is an easy way to force Web API into streaming the uploaded files, rather than buffering the entire request input stream in memory.

We were given control over this through this commit (yes, it’s part of the Web API RTM), and Kiran Challa from MSFT wrote a very nice short post about how to use it. Basically, we need to replace the default IHostBufferPolicySelector with our own implementation:

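```csharp
// Namespace: System.Web.Http.Hosting
public interface IHostBufferPolicySelector
{
    // Decides whether the host buffers the request body for a given request.
    bool UseBufferedInputStream(object hostContext);

    // Decides whether the host buffers the given response before sending it.
    bool UseBufferedOutputStream(HttpResponseMessage response);
}
```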

That’s the service that decides, on a per-request basis, whether a given request will be buffered. We could either implement the interface directly, or extend the only existing implementation, System.Web.Http.WebHost.WebHostBufferPolicySelector. Here’s an example:

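```csharp
using System;
using System.Net.Http;
using System.Web;
using System.Web.Http.WebHost;

public class NoBufferPolicySelector : WebHostBufferPolicySelector
{
    public override bool UseBufferedInputStream(object hostContext)
    {
        var context = hostContext as HttpContextBase;

        if (context != null)
        {
            // The route may not define a "controller" value, so avoid calling ToString() on null.
            var controller = context.Request.RequestContext.RouteData.Values["controller"] as string;

            // Stream (do not buffer) requests routed to UploadingController.
            if (string.Equals(controller, "uploading", StringComparison.InvariantCultureIgnoreCase))
                return false;
        }

        // All other requests keep the default, buffered behavior.
        return true;
    }

    public override bool UseBufferedOutputStream(HttpResponseMessage response)
    {
        // Responses keep the default web host behavior.
        return base.UseBufferedOutputStream(response);
    }
}
```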

In the case above, we check if the request is routed to the UploadingController, and if so, we will not buffer the request. In all other cases, the requests remain buffered.
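If more than one controller accepts large uploads, the decision can be pulled out of the selector into a pure function, which also makes it testable without System.Web. The sketch below is a hypothetical refactoring, not part of Web API: `BufferingRules` and the controller names "uploading" and "files" are assumptions chosen for illustration, and it uses `OrdinalIgnoreCase`, which is the usual choice for comparing identifiers such as controller names.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: the buffering decision from NoBufferPolicySelector as a pure function.
public static class BufferingRules
{
    // Example controller names assumed to receive large uploads.
    private static readonly HashSet<string> StreamedControllers =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "uploading", "files" };

    // Returns false (stream, do not buffer) only for the listed controllers.
    // A missing controller value falls back to the default buffered behavior.
    public static bool UseBufferedInputStream(string controllerName) =>
        string.IsNullOrEmpty(controllerName) || !StreamedControllers.Contains(controllerName);
}
```

Inside `NoBufferPolicySelector.UseBufferedInputStream` the check would then become `return BufferingRules.UseBufferedInputStream(context.Request.RequestContext.RouteData.Values["controller"] as string);` after the null check on `context`.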

You then need to register the new service in GlobalConfiguration (note: this type of service must be global; the setting will not work in per-controller configuration!):

```csharp
GlobalConfiguration.Configuration.Services.Replace(typeof(IHostBufferPolicySelector), new NoBufferPolicySelector());
```

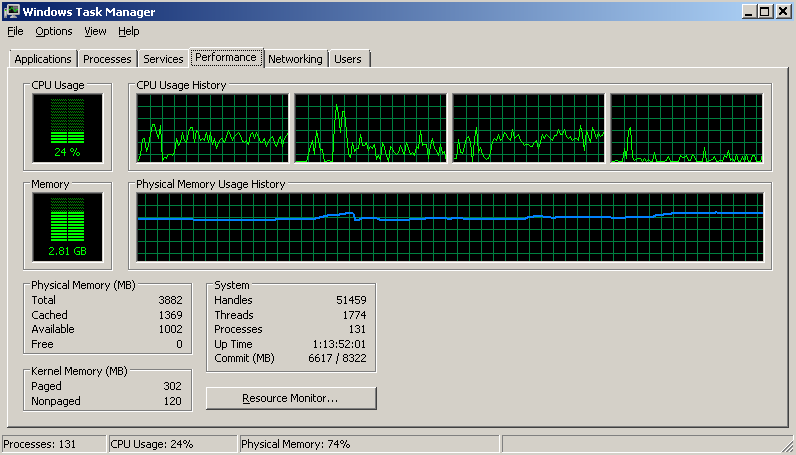

I will not go into details about how to upload files to Web API - this was already covered in one of my previous posts. Instead, here is a simple example of how resource management on your server improves when we switch off input stream buffering. Below is memory consumption when uploading a 400MB file on my local machine, with default Web API settings:

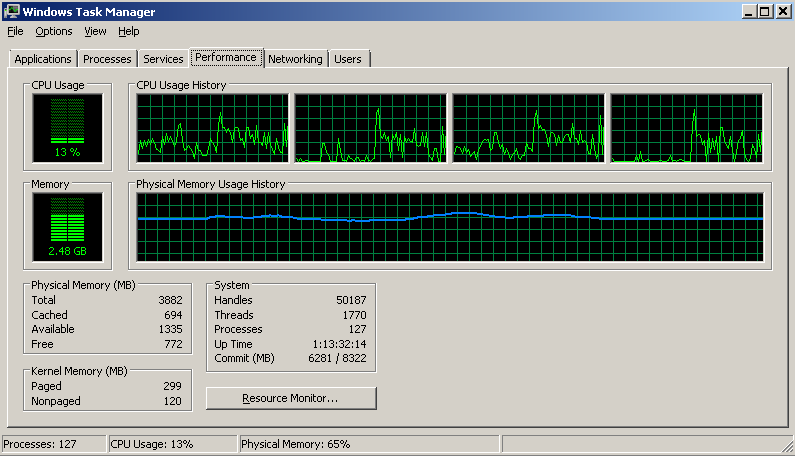

and here, after replacing the default IHostBufferPolicySelector:

So what happens under the hood? In the Web API pipeline, HttpControllerHandler decides whether to buffer the request input stream by consulting a lazily loaded instance of the policy selector (our IHostBufferPolicySelector). If the request should not be buffered (like ours), it uses the good old HttpRequest.GetBufferlessInputStream from the System.Web namespace. As a result, the underlying Stream object is returned immediately, and we can begin processing the request body before its complete contents have been received.
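Processing the body "before its complete contents have been received" only pays off if the consuming code also avoids buffering, i.e. it reads the stream in fixed-size chunks and writes each chunk out straight away. The hosting-independent sketch below shows that pattern; `StreamingCopy` is a hypothetical name, and the assumption is that in a Web API action `source` would be the stream from `Request.Content.ReadAsStreamAsync()` and `destination` a `FileStream`.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

// Hypothetical helper: copies a stream in fixed-size chunks so that at most
// bufferSize bytes of the body are held in memory at any time.
public static class StreamingCopy
{
    public static async Task<long> CopyInChunksAsync(Stream source, Stream destination, int bufferSize = 81920)
    {
        if (source == null) throw new ArgumentNullException(nameof(source));
        if (destination == null) throw new ArgumentNullException(nameof(destination));
        if (bufferSize <= 0) throw new ArgumentOutOfRangeException(nameof(bufferSize));

        var buffer = new byte[bufferSize];
        long total = 0;
        int read;

        // ReadAsync returns 0 once the end of the stream has been reached.
        while ((read = await source.ReadAsync(buffer, 0, buffer.Length).ConfigureAwait(false)) > 0)
        {
            await destination.WriteAsync(buffer, 0, read).ConfigureAwait(false);
            total += read;
        }

        return total;
    }
}
```

The built-in `Stream.CopyToAsync(destination, bufferSize)` does the same job; the explicit loop is only there to make the bounded memory use visible.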

Unfortunately, while it seems very straightforward, it’s not all rainbows and unicorns. As you have probably already noticed, all of this relies very heavily on System.Web, which makes it unusable outside of the ASP.NET hosting context. As a result, the beautiful symmetry of Web API between the self host and web host scenarios is broken here.

5. Self host

If you are trying to achieve any of the above in self host, you are forced to use a workaround, which is setting the TransferMode of your self host’s configuration to one of the following:

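```csharp
// selfHostConf is your HttpSelfHostConfiguration; TransferMode comes from System.ServiceModel.

// requests only
selfHostConf.TransferMode = TransferMode.StreamedRequest;

// responses only
selfHostConf.TransferMode = TransferMode.StreamedResponse;

// both
selfHostConf.TransferMode = TransferMode.Streamed;
```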

TransferMode is a concept borrowed from WCF and comes in four flavors: Buffered (the default), Streamed, StreamedRequest and StreamedResponse; the last three are the ones shown in the snippet above.

It’s worth noting that for HTTP transports such as the one Web API uses, switching to Streamed mode has no major repercussions, whereas for TCP services Streamed mode also changes the native channel shape from IDuplexSessionChannel (used in buffered mode) to IRequestChannel and IReplyChannel (but that’s beyond the scope of our Web API discussion here).

Either way, the downside of this solution is that TransferMode is a global setting for all requests to your self-hosted service, so you can’t decide on a per-request basis whether to buffer or not (as we did in the web host example). A workaround would be to set up two parallel self-host instances, one for general purposes and the other for uploads only, but again, that’s far from perfect and in many cases not acceptable at all.

6. Using HttpClient

Finally, a short note on buffering on the client side, i.e. when reading a file asynchronously. By default, HttpClient in version 4 of the .NET Framework will buffer the body, so if you want control over whether the response is streamed or buffered (and want to prevent your client from running out of memory when dealing with large files), you’d be better off using HttpWebRequest directly, as it gives you granular control through the HttpWebRequest.AllowReadStreamBuffering and HttpWebRequest.AllowWriteStreamBuffering properties. As a matter of fact, HttpWebRequest sits underneath HttpClient, but these properties are not exposed to the consumer of HttpClient.

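In .NET 4.5, HttpClient lets you avoid buffering the response: if you pass HttpCompletionOption.ResponseHeadersRead to SendAsync or GetAsync, the call completes as soon as the headers have arrived and the body can be read as a stream. With the default, HttpCompletionOption.ResponseContentRead, the content is still read into memory first.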
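Here is a hedged sketch of streaming a large download to a destination stream with `HttpClient`, assuming a version that supports `HttpCompletionOption` (as .NET 4.5 does). `StreamingDownload` and `InMemoryHandler` are hypothetical names; the handler is only a stub that serves a byte array so the example runs without a real server, and against a real endpoint you would use the default handler.

```csharp
using System;
using System.IO;
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

public static class StreamingDownload
{
    // ResponseHeadersRead makes SendAsync complete once the headers have arrived;
    // CopyToAsync then pulls the body through in 80 KB chunks instead of buffering it whole.
    public static async Task DownloadToAsync(HttpClient client, Uri uri, Stream destination)
    {
        using (var request = new HttpRequestMessage(HttpMethod.Get, uri))
        using (var response = await client
            .SendAsync(request, HttpCompletionOption.ResponseHeadersRead)
            .ConfigureAwait(false))
        {
            response.EnsureSuccessStatusCode();

            using (var body = await response.Content.ReadAsStreamAsync().ConfigureAwait(false))
            {
                await body.CopyToAsync(destination, 81920).ConfigureAwait(false);
            }
        }
    }
}

// Hypothetical stub handler serving an in-memory payload, for illustration only.
public sealed class InMemoryHandler : HttpMessageHandler
{
    private readonly byte[] _payload;

    public InMemoryHandler(byte[] payload)
    {
        _payload = payload ?? throw new ArgumentNullException(nameof(payload));
    }

    protected override Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
    {
        var response = new HttpResponseMessage(HttpStatusCode.OK)
        {
            Content = new StreamContent(new MemoryStream(_payload)),
            RequestMessage = request
        };
        return Task.FromResult(response);
    }
}
```

In a real client, `destination` would typically be a `FileStream`, so memory use stays bounded by the copy buffer regardless of the file size.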

Summary

Dealing with large files in Web API (and, for that matter, in any HTTP scenario) can be a bit troublesome and can sometimes even lead to disastrous service outages, but if you know what you are doing, there is no reason to be stressed about it.

On the other hand, request/response stream buffering is one of those areas where Web API still carries the baggage of System.Web in web host scenarios, so you need to be careful: not everything you can do in web host can be achieved in self host.