In my recent posts, I’ve been exploring various facets of the Azure OpenAI Service, discussing how it can power up our applications with AI. Today, I’m taking a slightly different angle - I want to dive into how we can enhance our projects further by integrating Azure OpenAI Service with Azure AI Speech. Let’s explore what this integration means and how it could lead to exciting, AI-powered applications.

General concept

Speech synthesis serves as an ideal complement to the generative AI capabilities provided by GPT models within the Azure OpenAI Service. It’s easy to envision the creation of interactive, assistant-type applications that not only generate content in natural language but also accompany it with spoken audio.

At the same time, it’s incredibly easy to piece these things together. Let’s do that today, but before we begin we need to make sure that the following Azure prerequisites are available:

- Azure OpenAI Service; this is a gated service but I already blogged about how to get access to it. We’ll need a GPT-series model deployment there, and the authentication key to be able to call it

- Azure AI Speech, which will allow us to synthesize spoken audio. We’ll need the account’s region and its authentication key at hand

Sample application

Several of the AI-related demos I have written about on this blog hovered around using ArXiv API to fetch scientific articles, and then doing something interesting with them. We are going to stay in that space again today, which in turn allows us to reuse the article fetching (ArxivHelper) code that we defined in earlier blog posts.

In today’s demo we will build a console application, which will fetch quantum computing-related scientific papers from ArXiv, summarize them, and then use Azure AI Speech to read those summaries out to us - providing us with a de facto private tutor or private podcast (depending on how you choose to think about it).

In order to achieve these objectives, our application will need to reference three NuGet packages:

- Azure.AI.OpenAI, the Azure OpenAI SDK, to streamline the communication with the service

- Microsoft.CognitiveServices.Speech, the Azure AI Speech SDK. The package name actually reflects the original branding of the service, even though the new official name is “AI Speech”

- Spectre.Console, our favorite NuGet package for rich output to the console. This is technically not necessary, but since our UI is the console, we’ll want to make it a bit richer than the default output.

The references in the csproj file would look as follows (the version numbers correspond to the latest available ones at the time of writing this blog post):

<ItemGroup>
  <PackageReference Include="Azure.AI.OpenAI" Version="1.0.0-beta.14" />
  <PackageReference Include="Microsoft.CognitiveServices.Speech" Version="1.36.0" />
  <PackageReference Include="Spectre.Console" Version="0.48.0" />
</ItemGroup>

Within the application code, the first thing to do is to accept the date for which we want to fetch all “quantum computing” articles. The date is passed in as a CLI argument in yyyyMMdd format; when it is omitted, the current UTC date is used. If the daily feed of papers fails to load, we will exit the application, as there is no point in continuing further.

var date = args.Length == 1 ? args[0] : DateTime.UtcNow.ToString("yyyyMMdd");
var feed = await ArxivHelper.FetchArticles("ti:\"quantum computing\"", date);
if (feed == null)
{
    Console.WriteLine("Failed to load the feed.");
    return;
}
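The date argument is passed straight through to the ArXiv query, so a malformed value would only surface as an empty or failed feed. As a minimal sketch, assuming we would rather reject such input up front, the argument could be checked with DateTime.TryParseExact. ResolveDate is a hypothetical helper and not part of the original sample.

```csharp
using System;
using System.Globalization;

// Hypothetical helper (not in the original sample): returns the date in
// yyyyMMdd form, or null when the single CLI argument is not a valid date.
static string? ResolveDate(string[] args, DateTime utcNow)
{
    // Same fallback as the original code: anything other than exactly
    // one argument means "use today's UTC date".
    if (args.Length != 1)
    {
        return utcNow.ToString("yyyyMMdd", CultureInfo.InvariantCulture);
    }

    var isValid = DateTime.TryParseExact(
        args[0],
        "yyyyMMdd",
        CultureInfo.InvariantCulture,
        DateTimeStyles.None,
        out _);

    return isValid ? args[0] : null;
}

Console.WriteLine(ResolveDate(new[] { "20240301" }, DateTime.UtcNow) ?? "invalid");   // 20240301
Console.WriteLine(ResolveDate(new[] { "2024-03-01" }, DateTime.UtcNow) ?? "invalid"); // invalid
```

In the application, a null result could lead to a short usage message and an early return, in the same way as the failed feed check.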

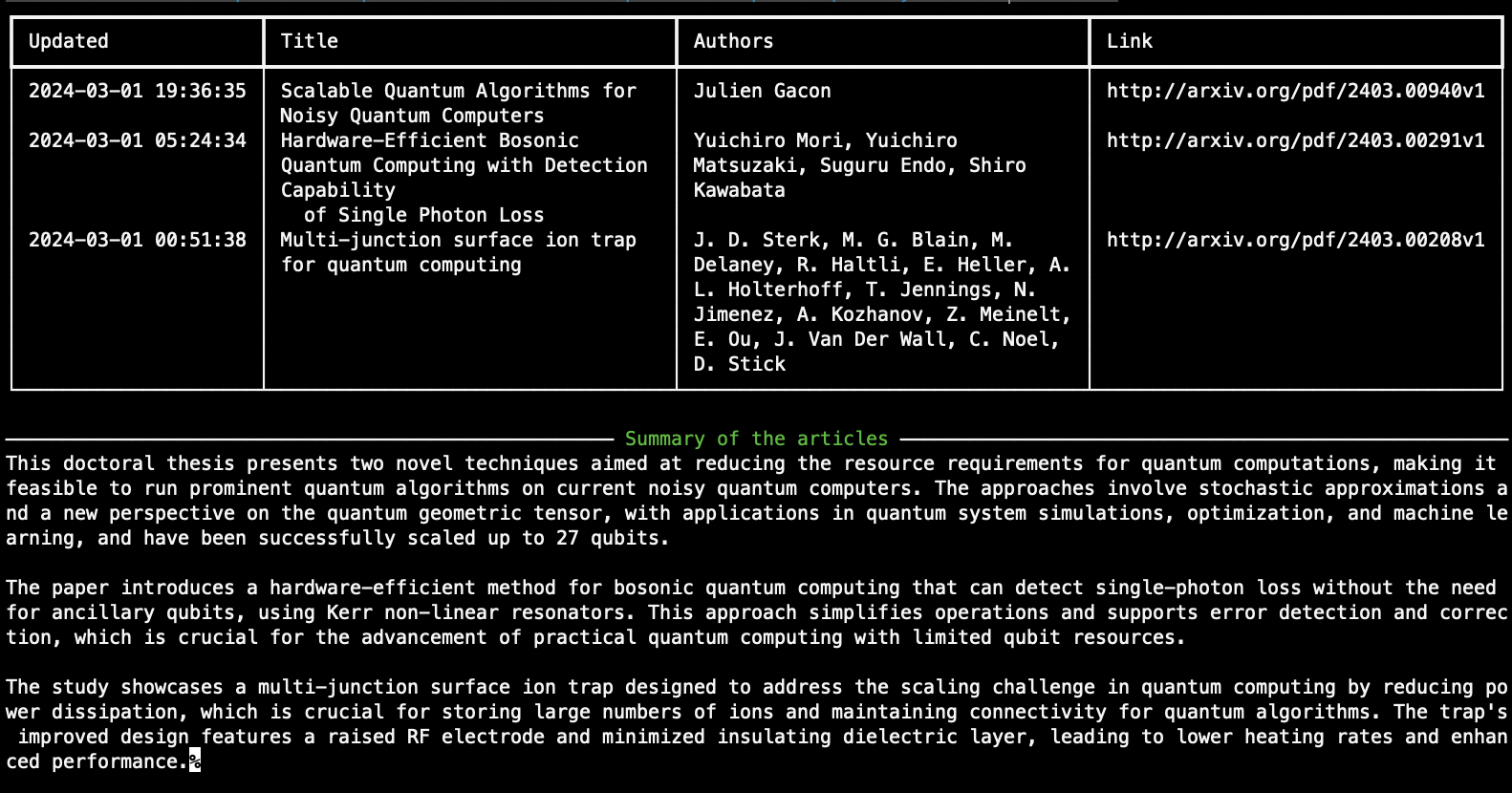

Next, we are going to write out the matching documents into the console, along with their metadata in a nice tabular format. The TryWriteOutItems method relies on Spectre.Console.

if (TryWriteOutItems(feed))
{
    Console.WriteLine();
    // enhance with Azure OpenAI in the next step
}

bool TryWriteOutItems(Feed feed)
{
    if (feed.Entries.Count == 0)
    {
        Console.WriteLine("No items today...");
        return false;
    }

    var table = new Table
    {
        Border = TableBorder.HeavyHead
    };

    table.AddColumn("Updated");
    table.AddColumn("Title");
    table.AddColumn("Authors");
    table.AddColumn("Link");

    foreach (var entry in feed.Entries)
    {
        table.AddRow(
            $"{Markup.Escape(entry.Updated.ToString("yyyy-MM-dd HH:mm:ss"))}",
            $"{Markup.Escape(entry.Title)}",
            $"{Markup.Escape(string.Join(", ", entry.Authors.Select(x => x.Name).ToArray()))}",
            $"[link={entry.PdfLink}]{entry.PdfLink}[/]"
        );
    }

    AnsiConsole.Write(table);
    return true;
}

As soon as we have the documents listed, we can proceed to the next step, which is to ask Azure OpenAI to summarize each of them. We will then render the summary below the table, and as it gets rendered, we will call the Azure AI Speech API to get the summarization text synthesized and spoken out to us.

In order to feed the articles to the GPT model, we will convert them into the following concatenated format.

Title: <title1>
Abstract: <abstract1>
Title: <title2>
Abstract: <abstract2>
...

Such a structure will make it easy for the model to reason about the content and be able to summarize the articles for us.

if (TryWriteOutItems(feed))
{
    Console.WriteLine();
    var inputEntries = feed.Entries.Select(e => $"Title: {e.Title}{Environment.NewLine}Abstract: {e.Summary}");
    await EnhanceWithOpenAi(string.Join(Environment.NewLine, inputEntries));
}
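One detail worth considering: if titles or abstracts contain hard line breaks, the one-line-per-field structure gets blurred. The following is a rough sketch, assuming that is the case for our feed, of how the input could be flattened before concatenation. Flatten and BuildPrompt are hypothetical names, and plain tuples stand in for the feed entry type.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Collapses any run of whitespace (including line breaks) into a single space.
static string Flatten(string text) => Regex.Replace(text, @"\s+", " ").Trim();

// Hypothetical stand-in for the feed entries: (Title, Summary) tuples.
// Produces the "Title: ... / Abstract: ..." format described above.
static string BuildPrompt(IEnumerable<(string Title, string Summary)> entries) =>
    string.Join(
        Environment.NewLine,
        entries.Select(e =>
            $"Title: {Flatten(e.Title)}{Environment.NewLine}Abstract: {Flatten(e.Summary)}"));

var sample = new[]
{
    (Title: "Paper\n  one", Summary: "First line\nsecond line."),
    (Title: "Paper two", Summary: "Single line."),
};

// Prints four lines: a Title line and an Abstract line for each entry.
Console.WriteLine(BuildPrompt(sample));
```

Whether this extra step is needed depends on how the feed is parsed; the model usually copes with stray line breaks, so treat it as optional tidying.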

The EnhanceWithOpenAi method will first load all the necessary keys and configuration settings to be able to bootstrap both the OpenAI and the AI Speech clients. This is done via environment variables, which will provide the Azure OpenAI key, endpoint and deployment name, as well as the Speech key and region (the URL there is actually fixed based on the region and routing to a specific customer account happens via key only).

var azureOpenAiServiceEndpoint = Environment.GetEnvironmentVariable("AZURE_OPENAI_SERVICE_ENDPOINT") ??
    throw new ArgumentException("AZURE_OPENAI_SERVICE_ENDPOINT is mandatory");
var azureOpenAiServiceKey = Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY") ??
    throw new ArgumentException("AZURE_OPENAI_API_KEY is mandatory");
var azureOpenAiDeploymentName = Environment.GetEnvironmentVariable("AZURE_OPENAI_DEPLOYMENT_NAME") ??
    throw new ArgumentException("AZURE_OPENAI_DEPLOYMENT_NAME is mandatory");
var speechKey = Environment.GetEnvironmentVariable("AZURE_SPEECH_KEY") ??
    throw new ArgumentException("AZURE_SPEECH_KEY is mandatory");
var speechRegion = Environment.GetEnvironmentVariable("AZURE_SPEECH_REGION") ??
    throw new ArgumentException("AZURE_SPEECH_REGION is mandatory");

Next, the clients are initialized. In the Speech SDK we can choose one of the many predefined voices; in our case we’ll go with en-GB-SoniaNeural for no other reason than her pleasant British accent.

var speechConfig = SpeechConfig.FromSubscription(speechKey, speechRegion);
speechConfig.SpeechSynthesisVoiceName = "en-GB-SoniaNeural";
var audioOutputConfig = AudioConfig.FromDefaultSpeakerOutput();
using var speechSynthesizer = new SpeechSynthesizer(speechConfig, audioOutputConfig);
var client = new OpenAIClient(new Uri(azureOpenAiServiceEndpoint), new AzureKeyCredential(azureOpenAiServiceKey));

Once the clients are bootstrapped, we can send the request to Azure OpenAI, providing the article list we’d like to have summarized. The system prompt tells the model to shape its response so that it is suitable for conversion to spoken audio. For example, we’d like to avoid bullet points or other characters that are not typically used in spoken language.

var systemPrompt = """
    You are a summarization engine for ArXiv papers.
    You will take in input in the form of paper title and abstract,
    and summarize them in a digestible 2-3 sentence format.
    Your output is going to stylistically resemble speech
    - that is, do not include any bullet points,
    numbering or other characters not appearing in spoken language.
    Each summary should be a simple, plain text, separate paragraph.
    """;

var completionsOptions = new ChatCompletionsOptions
{
    Temperature = 0,
    NucleusSamplingFactor = 1,
    FrequencyPenalty = 0,
    PresencePenalty = 0,
    MaxTokens = 800,
    DeploymentName = azureOpenAiDeploymentName,
    Messages =
    {
        new ChatRequestSystemMessage(systemPrompt),
        // prompt is the concatenated Title/Abstract text passed into EnhanceWithOpenAi
        new ChatRequestUserMessage(prompt)
    }
};

var responseStream = await client.GetChatCompletionsStreamingAsync(completionsOptions);

By using the response stream from the service, we can analyze the chunks returned by Azure OpenAI, and as soon as we encounter a reasonable break in text, such as a new line or a full stop, we will send the buffered text off to the AI Speech API. Thanks to this approach, the user does not have to wait for the whole summary to be streamed down before it gets read out loud, but instead the audio synthesis and playback can happen on-the-fly.

We can achieve the combined step of speech synthesis and an immediate audio playback by using the SpeakTextAsync method on the AI Speech client.

AnsiConsole.Write(new Rule("[green]Summary of the articles[/]"));

var gptBuffer = new StringBuilder();
await foreach (var completionUpdate in responseStream)
{
    var message = completionUpdate.ContentUpdate;
    if (string.IsNullOrEmpty(message))
    {
        continue;
    }

    AnsiConsole.Write(message);
    gptBuffer.Append(message);

    // synthesize speech when encountering sentence breaks
    if (message.Contains("\n") || message.Contains("."))
    {
        var sentence = gptBuffer.ToString().Trim();
        if (!string.IsNullOrEmpty(sentence))
        {
            _ = await speechSynthesizer.SpeakTextAsync(sentence);
            gptBuffer.Clear();
        }
    }
}

// speak any text left in the buffer if the stream did not end on a sentence break
var remainder = gptBuffer.ToString().Trim();
if (!string.IsNullOrEmpty(remainder))
{
    _ = await speechSynthesizer.SpeakTextAsync(remainder);
}
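The buffering idea is independent of both SDKs, so it can be tried out in isolation. Below is a small sketch, with a hypothetical ToSegments function and a hard-coded array standing in for the streamed chunks, that groups chunks into speakable segments at a new line or full stop and emits whatever remains once the input ends.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper: groups streamed chunks into speakable segments.
// A segment is emitted when a chunk contains a newline or a full stop,
// and once more at the end so a trailing fragment is not lost.
static IEnumerable<string> ToSegments(IEnumerable<string> chunks)
{
    var buffer = new StringBuilder();
    foreach (var chunk in chunks)
    {
        if (string.IsNullOrEmpty(chunk))
        {
            continue;
        }

        buffer.Append(chunk);
        if (chunk.Contains('\n') || chunk.Contains('.'))
        {
            var segment = buffer.ToString().Trim();
            if (segment.Length > 0)
            {
                yield return segment;
                buffer.Clear();
            }
        }
    }

    var rest = buffer.ToString().Trim();
    if (rest.Length > 0)
    {
        yield return rest;
    }
}

// Simulated stream; in the application these would be the ContentUpdate values.
var chunks = new[] { "Quantum", " error", " correction", ".", " Next", " paper", "\n", "tail" };
foreach (var segment in ToSegments(chunks))
{
    Console.WriteLine($"[{segment}]");
}
// [Quantum error correction.]
// [Next paper]
// [tail]
```

Note that splitting on every full stop is a simplification: a chunk containing a decimal number or an abbreviation would also trigger a break, which may or may not matter for the listening experience.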

And that’s it!

Trying it out

We are now ready to run our application. For testing we can use the date 20240301, on which there are three papers on ArXiv with “quantum computing” in their titles.

dotnet run 20240301

The output of the program should be the tabular list of all retrieved articles, as well as their summary below. The summary is streamed to the console output, so it appears progressively. After each sentence, the speech audio gets synthesized and played out.

As you can see, the summaries are pretty interesting, and - though obviously not visible in the screenshot - they were accompanied by the speech output, providing a nice “virtual assistant” type of experience.

The source code for this post is, as always, available on GitHub.