In this post, we will explore the flexibility behind Azure AI Inference, a new library from Azure, which allows us to run inference against a wide range of AI model deployments - both in Azure and, as we will see in this notebook, in other places as well.

It is available for Python and for .NET - in this post, we will focus on the Python version.

Setting the stage 🔗

To begin with, we need to install the azure.ai.inference and the python-dotenv packages.

from azure.ai.inference import ChatCompletionsClient

from azure.core.credentials import AzureKeyCredential

from dotenv import load_dotenv

import os

load_dotenv()

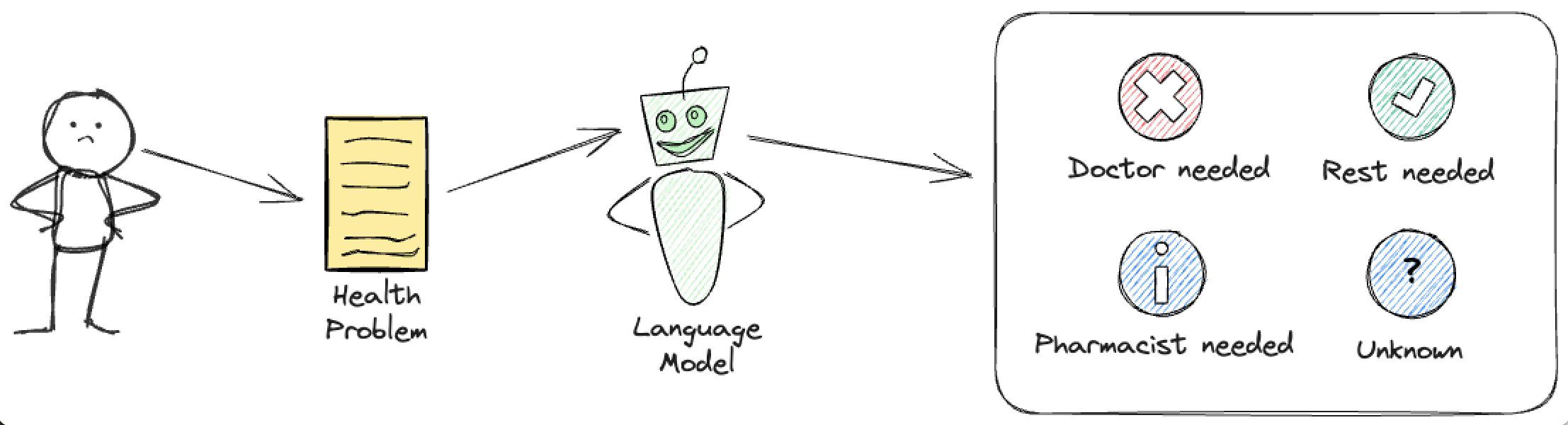

Next, we are going to define a general task for our models. It will be a sample health problem classification, where the model will be asked to categorize user’s input into one of four possible classes:

- doctor_required - if the user should see a doctor immediately

- pharmacist_required - if the user should see a pharmacist - for problems that can be solved with over-the-counter drugs

- rest_required - if the user should rest and does not need professional help

- unknown - if the model is not sure about the classification

instruction = """You are a medical classification engine for health conditions. Classify the prompt into into one of the following possible treatment options: 'doctor_required' (serious condition), 'pharmacist_required' (light condition) or 'rest_required' (general tiredness). If you cannot classify the prompt, output 'unknown'.

Only respond with the single word classification. Do not produce any additional output.

# Examples:

User: "I did not sleep well." Assistant: "rest_required"

User: "I chopped off my arm." Assistant: "doctor_required"

# Task

User:

"""

We then need a set of sample inputs to the model, and the expected outputs. Those are shown below.

user_inputs = [

"I'm tired.", # rest_required

"I'm bleeding from my eyes.", # doctor_required

"I have a headache." # pharmacist_required

]

The inference code is very simple - we will call the complete method on the inference client, and indicate that we are interested in the streaming of the response. This way, we can process the response as it comes in, and not wait for the whole response to be ready. We iterate through all the available user inputs, and print the model’s response for each of them.

def run_inference():

for user_input in user_inputs:

messages = [{

"role": "user",

"content": f"{instruction}{user_input} Assistant: "

}]

print(f"{user_input} -> ", end="")

stream = client.complete(

messages=messages,

stream=True

)

for chunk in stream:

if chunk.choices and chunk.choices[0].delta.content:

print(chunk.choices[0].delta.content, end="")

print()

We are going to leverage this shared inference code across different models and endpoints, to show how easy it is to switch between them.

Azure OpenAI 🔗

The first example will show using the inference client against an Azure OpenAI endpoint. In this case, three arguments are mandatory:

- an endpoint URL in the form of https://{resouce-name}.openai.azure.com/openai/deployments/{deployment-name}

- the credential to access it (could be either the key or the integrated Azure SDK authentication)

- the API version (this is mandatory in Azure OpenAI API access)

In my case, I am going to use gpt-4o-mini model:

AZURE_OPENAI_RESOURCE = os.environ["AZURE_OPENAI_RESOURCE"]

AZURE_OPENAI_KEY = os.environ["AZURE_OPENAI_KEY"]

client = ChatCompletionsClient(

endpoint=f"https://{AZURE_OPENAI_RESOURCE}.openai.azure.com/openai/deployments/gpt-4o-mini/",

credential=AzureKeyCredential(AZURE_OPENAI_KEY),

api_version="2024-06-01",

)

print(" * AZURE OPENAI INFERENCE * ")

run_inference()

If we run this example, the output will looks as follows:

* AZURE OPENAI INFERENCE *

I'm tired. -> rest_required

I'm bleeding from my eyes. -> doctor_required

I have a headache. -> pharmacist_required

Github Models 🔗

The second example will show how to use the inference client against a model hosted in Github Models. In order to make that, work, a Personal Access Token for Github is needed - the token does not need to have any permissions.

In our case, we expect that the token is available in the env variable GITHUB_TOKEN.

GITHUB_TOKEN = os.environ["GITHUB_TOKEN"]

With Github Models, we can easily choose from a huge range of models, without having to deploy anything - so let’s try running our task against Llama-3.2-11B-Vision-Instruct.

client = ChatCompletionsClient(

endpoint="https://models.inference.ai.azure.com",

credential=AzureKeyCredential(GITHUB_TOKEN),

model="Llama-3.2-11B-Vision-Instruct"

)

print(" * GITHUB MODELS INFERENCE * ")

run_inference()

If we run this example, the output will looks as follows:

* GITHUB MODELS INFERENCE *

I'm tired. -> rest_required

I'm bleeding from my eyes. -> doctor_required

I have a headache. -> pharmacist_required

Azure AI deployments 🔗

The next example shows using the client against Azure AI model deployment. The prerequisite here is to have a model deployed as Serverless API or as a Managed Compute endpoint - the relevant instructions can be found here.

The two pieces of information needed to connect to such model are:

- an endpoint URL in the form of https://{deployment-name}.{region}.models.ai.azure.com

- the credential to access it (could be either the key or the integrated Azure SDK authentication)

In our case we will read that information from the environment variables below, with the endpoint being explicitly split into the region and the deployment name. In my code I am using AI21-Jamba-1-5-Mini model, although that is not evident from the code - as the model name is not needed in the code, only the endpoint.

AZURE_AI_REGION = os.environ["AZURE_AI_REGION"]

AZURE_AI_DEPLOYMENT_NAME = os.environ["AZURE_AI_DEPLOYMENT_NAME"]

AZURE_AI_KEY = os.environ["AZURE_AI_KEY"]

client = ChatCompletionsClient(

endpoint=f"https://{AZURE_AI_DEPLOYMENT_NAME}.{AZURE_AI_REGION}.models.ai.azure.com",

credential=AzureKeyCredential(AZURE_AI_KEY)

)

print(" * AZURE AI INFERENCE * ")

run_inference()

If we run this example, the output will looks as follows:

* AZURE AI INFERENCE *

I'm tired. -> rest_required.

I'm bleeding from my eyes. -> doctor_required.

I have a headache. -> pharmacist_required.

OpenAI Inference 🔗

Despite the fact that the library has “Azure” in its name, it is not restricted to models running in Azure (or Github, which is part of Microsoft). It can be used with any other model that is reachable over an HTTP compatible compatible with OpenAI API. This of course includes OpenAI itself.

The next example shows, by just using the OpenAI API key, how to connect to the OpenAI model - in our case, the gpt-4o-mini.

OPENAI_KEY = os.environ["OPENAI_KEY"]

client = ChatCompletionsClient(

endpoint="https://api.openai.com/v1",

credential=AzureKeyCredential(OPENAI_KEY),

model="gpt-4o-mini"

)

print(" * AZURE OPENAI INFERENCE * ")

run_inference()

The output here is, unsurprisingly, the same as before:

* OPENAI INFERENCE *

I'm tired. -> rest_required

I'm bleeding from my eyes. -> doctor_required

I have a headache. -> pharmacist_required

Local LLM 🔗

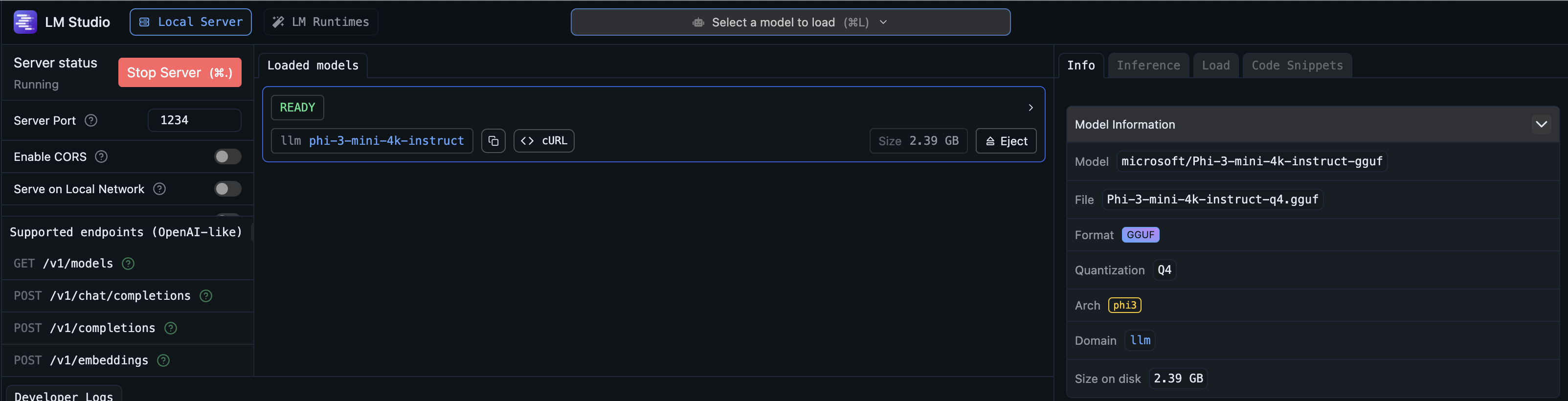

The final example bootstraps a ChatCompletionsClient pointing at the local completion server from LM Studio. In this case, we do not need to supply the credentials as the server is running locally and we can access it without authentication.

In my case, I configured LM Studio to use phi-3-mini-4k-instruct.

client = ChatCompletionsClient(

endpoint="http://localhost:1234/v1",

credential=AzureKeyCredential("")

)

print(" * LOCAL LM STUDIO SERVER INFERENCE * ")

run_inference()

And the output is, of course:

* LOCAL LM STUDIO SERVER INFERENCE *

I'm tired. -> rest_required

I'm bleeding from my eyes. -> doctor_required

I have a headache. -> pharmacist_required

Summary 🔗

Azure AI Inference is a powerful library that allows you to access a wide range of models, regardless of where they are deployed. In this post, we have shown how to use it with Azure OpenAI, Github Models, Azure AI deployments, OpenAI, and a local LLM server. The inference code remains the same, regardless of the model, which makes it very easy to switch between different models and endpoints. This applies to both the Python version, which we explored in this post, and the .NET version.

If you would like to play around with this code further, you can find the Jupyter notebook here.