Last time around, we discussed how Large Language Models can select the appropriate tool and its required parameters out of freely flowing conversation text. We also introduced the formal concept of those tools, whose parameters are structurally described using JSON Schema.

In this part 2 of the series, we are going to build two different .NET command line assistant applications, both taking advantage of the tool calling integration. We will orchestrate everything by hand - that is, we will only use the Azure OpenAI Service API directly (or rather using the .NET SDK for Azure OpenAI) - without any additional AI frameworks.

Series overview

- Part 1: The Basics

- Part 2 (this part): Using the tools directly via the SDK

An arXiv tool orchestration sample

We have previously covered many different aspects of Azure OpenAI Service on this blog, so I will not dive into the basics of getting started, and jump straight to the core topic. Almost all the demos that we used here previously were related to using arXiv, so let us stay within that theme for now.

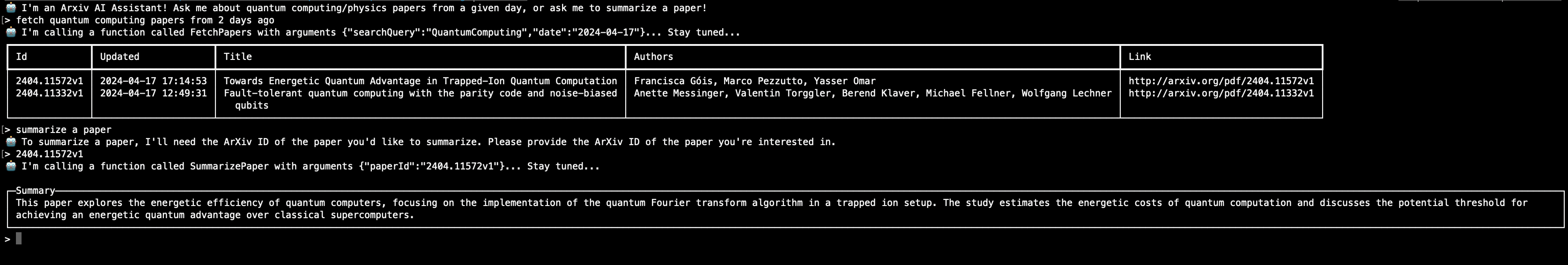

To demonstrate the integration of tool calling, we will build a command line AI Assistant that can access two tools - one that will fetch papers from arXiv and display them, the other that will summarize a paper. With that, the assistant will offer an interactive way to browse arXiv in a conversational format.

I assume it is already clear how to set up the Azure OpenAI Service, create a deployment and obtain the key to call it. For the purpose of this demo a GPT-3.5 model is enough. Let’s assume that the application already has access, via pre-configured environment variables, to the endpoint, API key and deployment name required to communicate with Azure OpenAI. To run the code later we will of course need to make sure that these variables are set correctly and point to our Azure backend:

var azureOpenAiServiceEndpoint = Environment.GetEnvironmentVariable("AZURE_OPENAI_SERVICE_ENDPOINT")

?? throw new Exception("AZURE_OPENAI_SERVICE_ENDPOINT missing");

var azureOpenAiServiceKey = Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY") ??

throw new Exception("AZURE_OPENAI_API_KEY missing");

var azureOpenAiDeploymentName = Environment.GetEnvironmentVariable("AZURE_OPENAI_DEPLOYMENT_NAME") ??

throw new Exception("AZURE_OPENAI_DEPLOYMENT_NAME missing");

Next, let’s define an arXiv client capable of performing the two tasks that we mentioned before. The two methods the client exposes are FetchPapers, based on a category and date, and SummarizePaper, based on the paper ID. They will be surfaced to our AI Assistant as (function) tools.

class ArxivClient

{

private readonly OpenAIClient _client;

private readonly string _azureOpenAiDeploymentName;

public ArxivClient(OpenAIClient client, string azureOpenAiDeploymentName)

{

_client = client;

_azureOpenAiDeploymentName = azureOpenAiDeploymentName;

}

public async Task<Feed> FetchPapers(SearchQuery searchQuery, DateTime date)

{

var query = searchQuery == SearchQuery.QuantumPhysics ? "cat:quant-ph" : "ti:\"quantum computing\"";

var feed = await ArxivHelper.FetchArticles(query, date.ToString("yyyyMMdd"));

return feed;

}

public async Task<string> SummarizePaper(string paperId)

{

var feed = await ArxivHelper.FetchArticleById(paperId);

if (feed == null || feed.Entries.Count == 0)

{

return "Paper not found";

}

if (feed.Entries.Count > 1)

{

return "More than one match for this ID!";

}

var prompt = $"Title: {feed.Entries[0].Title}{Environment.NewLine}Abstract: {feed.Entries[0].Summary}";

var systemPrompt = """

You are a summarization engine for ArXiv papers. You will take in input in the form of paper title and abstract, and summarize them in a digestible 1-2 sentence format.

Each summary should be a simple, plain text, separate paragraph.

""";

var completionsOptions = new ChatCompletionsOptions

{

Temperature = 0,

FrequencyPenalty = 0,

PresencePenalty = 0,

MaxTokens = 400,

DeploymentName = _azureOpenAiDeploymentName,

Messages =

{

new ChatRequestSystemMessage(systemPrompt),

new ChatRequestUserMessage(prompt)

}

};

var completionsResponse = await _client.GetChatCompletionsAsync(completionsOptions);

if (completionsResponse.Value.Choices.Count == 0)

{

return "No response available";

}

var preferredChoice = completionsResponse.Value.Choices[0];

return preferredChoice.Message.Content.Trim();

}

}

enum SearchQuery

{

QuantumPhysics,

QuantumComputing

}

The fetch functionality is very simple, and relies on the ArxivHelper which can access the RSS feed of arXiv, and which we already discussed in depth a while ago.

The summarization function, on the other hand, is more interesting because it calls Azure OpenAI Service itself. That call is disconnected from our main assistant: it is a simple “self-contained” request to the GPT model running in the cloud, with its own system prompt and no shared conversation history. This is a common pattern, as it allows using different models for different tasks, as well as creating workflows where one model decides to, de facto, call another model.

Next, we will create an ExecutionHelper, which will act as a bridge between the main assistant code, and the arXiv client that we just wrote. It will have two primary tasks. Firstly, it will describe the available functions, with their parameters expressed as JSON Schema, in the format that is required by Azure OpenAI. Secondly, it will take care of the reverse process - accept the raw string name of the function selected by the model, as well as its JSON stringified arguments, and convert them into a local function invocation.

class ExecutionHelper

{

private readonly ArxivClient _arxivClient;

public ExecutionHelper(ArxivClient arxivClient)

{

_arxivClient = arxivClient;

}

public List<FunctionDefinition> GetAvailableFunctions()

=> new()

{

new FunctionDefinition

{

Description =

"Fetches quantum physics or quantum computing papers from ArXiv for a given date",

Name = nameof(ArxivClient.FetchPapers),

Parameters = BinaryData.FromObjectAsJson(new

{

type = "object",

properties = new

{

searchQuery =

new

{

type = "string",

@enum = new[] { "QuantumPhysics", "QuantumComputing" }

},

date = new { type = "string", format = "date" }

},

required = new[] { "searchQuery", "date" }

})

},

new FunctionDefinition

{

Description = "Summarizes a given paper based on the ArXiv ID of the paper.",

Name = nameof(ArxivClient.SummarizePaper),

Parameters = BinaryData.FromObjectAsJson(new

{

type = "object",

properties = new { paperId = new { type = "string" }, },

required = new[] { "paperId" }

})

}

};

public async Task<bool> InvokeFunction(string functionName, string functionArguments)

{

try

{

if (functionName == nameof(ArxivClient.FetchPapers))

{

using var doc = JsonDocument.Parse(functionArguments);

var root = doc.RootElement;

var searchQueryString = root.GetProperty("searchQuery").GetString();

var searchQuery = Enum.Parse<SearchQuery>(searchQueryString);

var date = root.GetProperty("date").GetDateTime();

var feed = await _arxivClient.FetchPapers(searchQuery, date);

WriteOutItems(feed.Entries);

return true;

}

if (functionName == nameof(ArxivClient.SummarizePaper))

{

using var doc = JsonDocument.Parse(functionArguments);

var root = doc.RootElement;

var paperId = root.GetProperty("paperId").GetString();

var summary = await _arxivClient.SummarizePaper(paperId);

Console.WriteLine();

var panel = new Panel(summary) { Header = new PanelHeader("Summary") };

AnsiConsole.Write(panel);

return true;

}

}

catch (Exception e)

{

AnsiConsole.WriteException(e);

// in case of any error, send back to user in error state

}

return false;

}

private void WriteOutItems(List<Entry> entries)

{

if (entries.Count == 0)

{

Console.WriteLine("No items to show...");

return;

}

var table = new Table

{

Border = TableBorder.HeavyHead

};

table.AddColumn("Id");

table.AddColumn("Updated");

table.AddColumn("Title");

table.AddColumn("Authors");

table.AddColumn("Link");

foreach (var entry in entries)

{

table.AddRow(

$"{Markup.Escape(entry.Id)}",

$"{Markup.Escape(entry.Updated.ToString("yyyy-MM-dd HH:mm:ss"))}",

$"{Markup.Escape(entry.Title)}",

$"{Markup.Escape(string.Join(", ", entry.Authors.Select(x => x.Name).ToArray()))}",

$"[link={entry.PdfLink}]{entry.PdfLink}[/]"

);

}

AnsiConsole.Write(table);

}

}

The ExecutionHelper exposes all of the available functions via the GetAvailableFunctions method, while the reverse process is facilitated by InvokeFunction. There, we need to match the function by name, and deserialize its arguments from the JSON format which is used by the model (recall the discussion in part 1 of this series). The presentation of data is handled there as well: the list of papers retrieved from arXiv is rendered as a table by the private WriteOutItems method, while the summary of a single paper is written out as a panel directly in InvokeFunction. In both cases we once again rely on Spectre.Console for a nice console UX.
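To make the round trip between the parameter description and the local invocation more tangible, here is a minimal sketch that relies only on System.Text.Json and does not touch the Azure SDK. The `ToolDispatchSketch` class and its two methods are hypothetical names introduced for illustration; they mirror the shape of the FetchPapers definition above but are not part of the sample application.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper, for illustration only; not part of the sample application.
public static class ToolDispatchSketch
{
    public enum SearchQuery { QuantumPhysics, QuantumComputing }

    // Serializes a JSON Schema description of the parameters of a FetchPapers-style function.
    public static string DescribeFetchPapersParameters() =>
        JsonSerializer.Serialize(new
        {
            type = "object",
            properties = new
            {
                // deriving the allowed values from the enum keeps schema and parser in sync
                searchQuery = new { type = "string", @enum = Enum.GetNames<SearchQuery>() },
                date = new { type = "string", format = "date" }
            },
            required = new[] { "searchQuery", "date" }
        });

    // Turns the raw JSON arguments produced by the model back into typed values.
    public static (SearchQuery Query, DateTime Date) ParseFetchPapersArguments(string json)
    {
        using var doc = JsonDocument.Parse(json);
        var root = doc.RootElement;

        var queryText = root.GetProperty("searchQuery").GetString()
            ?? throw new ArgumentException("searchQuery must not be null");
        var query = Enum.Parse<SearchQuery>(queryText);

        // GetDateTime accepts ISO 8601 values, including date-only strings such as 2024-03-15
        var date = root.GetProperty("date").GetDateTime();

        return (query, date);
    }
}
```

Calling `ToolDispatchSketch.ParseFetchPapersArguments` with a payload such as `{"searchQuery":"QuantumComputing","date":"2024-03-15"}` yields the enum value and the date that would then be passed to the arXiv client. Any malformed payload surfaces as an exception, which is why the real InvokeFunction wraps this step in a try/catch.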

The remaining part of the work is to write an interactive loop for our assistant, and wire all the pieces together. Let’s start by initializing all the necessary classes and defining the prompt:

var systemInstructions = $"""

You are an AI assistant designed to support users in navigating the ArXiv browser application, focusing on functions related to quantum physics and quantum computing research.

The application features specific functions that allow users to fetch papers and summarize them based on precise criteria. Adhere to the following rules rigorously:

1. **Direct Parameter Requirement:**

When a user requests an action, directly related to the functions, you must never infer or generate parameter values, especially paper IDs, on your own.

If a parameter is needed for a function call and the user has not provided it, you must explicitly ask the user to provide this specific information.

2. **Mandatory Explicit Parameters:**

For the function `SummarizePaper`, the `paperId` parameter is mandatory and must be provided explicitly by the user.

If a user asks for a paper summary without providing a `paperId`, you must ask the user to provide the paper ID.

3. **Avoid Assumptions:**

Do not make assumptions about parameter values.

If the user's request lacks clarity or omits necessary details for function execution, you are required to ask follow-up questions to clarify parameter values.

4. **User Clarification:**

If a user's request is ambiguous or incomplete, you should not proceed with function invocation.

Instead, ask for the missing information to ensure the function can be executed accurately and effectively.

5. **Grounding in Time:**

Today is {DateTime.Now.ToString("D")}. When the user asks about papers from today, you will use that date.

Yesterday was {DateTime.Now.AddDays(-1).ToString("D")}. You will correctly infer past dates.

Tomorrow will be {DateTime.Now.AddDays(1).ToString("D")}. You will ignore requests for papers from the future.

""";

var introMessage =

"I'm an Arxiv AI Assistant! Ask me about quantum computing/physics papers from a given day, or ask me to summarize a paper!";

AnsiConsole.MarkupLine($":robot: {introMessage}");

var openAiClient = new OpenAIClient(new Uri(azureOpenAiServiceEndpoint),

new AzureKeyCredential(azureOpenAiServiceKey));

var arxivClient = new ArxivClient(openAiClient, azureOpenAiDeploymentName);

var executionHelper = new ExecutionHelper(arxivClient);

var messageHistory = new List<ChatRequestMessage> {

new ChatRequestSystemMessage(systemInstructions),

new ChatRequestAssistantMessage(introMessage)

};

Since the assistant needs to be aware of dates, part of the system prompt ensures that time grounding is performed: the assistant is told explicitly what date was yesterday, what date is today, and what date will be tomorrow (this can be surprisingly tricky with LLMs!). As is always the case, prompt engineering takes a lot of trial and error, especially when we would like to ensure that the model does not hallucinate the values for function parameters. Therefore the messaging is quite strict, and we instruct the model to avoid any assumptions.
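One detail worth keeping in mind is that `ToString("D")` formats the date according to the culture of the machine running the assistant. The sketch below shows one possible way to produce the grounding lines with a fixed culture, so that the prompt reads the same everywhere. The `TimeGroundingSketch` class is a hypothetical name, and pinning the invariant culture is an assumption made for this illustration, not something the sample itself does.

```csharp
using System;
using System.Globalization;

// Hypothetical helper, for illustration only; not part of the sample application.
public static class TimeGroundingSketch
{
    // Builds the three grounding sentences for a given "today".
    public static string Build(DateTime today)
    {
        // the invariant culture always uses the long date pattern "dddd, dd MMMM yyyy"
        var culture = CultureInfo.InvariantCulture;

        return string.Join(Environment.NewLine,
            $"Today is {today.ToString("D", culture)}.",
            $"Yesterday was {today.AddDays(-1).ToString("D", culture)}.",
            $"Tomorrow will be {today.AddDays(1).ToString("D", culture)}.");
    }
}
```

For example, `TimeGroundingSketch.Build(new DateTime(2024, 3, 15))` starts with the line "Today is Friday, 15 March 2024.". Passing the date in as a parameter, rather than reading `DateTime.Now` inside the method, also makes the prompt easy to check in a unit test.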

We also initialize the OpenAIClient and the two helpers we just wrote - ArxivClient and ExecutionHelper. The initial welcome message is printed to the console and also added to the message history as an assistant message, so that the interaction history stays complete.

Finally, we follow with the interaction loop. The prompt is read from console input, converted into a request, enriched with the available tools (functions) and then submitted to the Azure OpenAI Service with temperature set to 0.

while (true)

{

Console.Write("> ");

var prompt = Console.ReadLine();

messageHistory.Add(new ChatRequestUserMessage(prompt));

var request = new ChatCompletionsOptions(azureOpenAiDeploymentName, messageHistory)

{

Temperature = 0,

MaxTokens = 400

};

foreach (var function in executionHelper.GetAvailableFunctions())

{

request.Tools.Add(new ChatCompletionsFunctionToolDefinition(function));

}

var completionResponse = await openAiClient.GetChatCompletionsStreamingAsync(request);

AnsiConsole.Markup(":robot: ");

var modelResponse = new StringBuilder();

var functionNames = new Dictionary<int, string>();

var functionArguments = new Dictionary<int, StringBuilder>();

await foreach (var message in completionResponse)

{

if (message.ToolCallUpdate is StreamingFunctionToolCallUpdate functionToolCallUpdate)

{

if (functionToolCallUpdate.Name != null)

{

functionNames[functionToolCallUpdate.ToolCallIndex] = functionToolCallUpdate.Name;

}

if (functionToolCallUpdate.ArgumentsUpdate != null)

{

if (!functionArguments.TryGetValue(functionToolCallUpdate.ToolCallIndex, out var argumentsBuilder))

{

argumentsBuilder = new StringBuilder();

functionArguments[functionToolCallUpdate.ToolCallIndex] = argumentsBuilder;

}

argumentsBuilder.Append(functionToolCallUpdate.ArgumentsUpdate);

}

}

if (message.ContentUpdate != null)

{

modelResponse.Append(message.ContentUpdate);

AnsiConsole.Write(message.ContentUpdate);

}

}

var modelResponseText = modelResponse.ToString();

var assistantResponse = new ChatRequestAssistantMessage(modelResponseText);

// call the first tool that was found

// in more sophisticated scenarios we may want to call multiple tools or let the user select one

var functionCall = functionNames.FirstOrDefault().Value;

var functionArgs = functionArguments.FirstOrDefault().Value?.ToString();

if (functionCall != null && functionArgs != null)

{

var toolCallId = Guid.NewGuid().ToString();

assistantResponse.ToolCalls.Add(new ChatCompletionsFunctionToolCall(toolCallId, functionCall, functionArgs));

AnsiConsole.WriteLine($"I'm calling a function called {functionCall} with arguments {functionArgs}... Stay tuned...");

var functionResult = await executionHelper.InvokeFunction(functionCall, functionArgs);

var toolOutput = new ChatRequestToolMessage(functionResult ? "Success" : "Failure", toolCallId);

messageHistory.Add(assistantResponse);

messageHistory.Add(toolOutput);

if (!functionResult)

{

AnsiConsole.WriteLine("There was an error executing a function!");

}

continue;

}

messageHistory.Add(assistantResponse);

Console.WriteLine();

}

As the model streams the response, we have to make sure that we capture both the text output of the model and the selected tool calls. This is a bit clunky because, with streaming, the function name and the argument JSON arrive in fragments that have to be accumulated across response chunks, keyed by the tool call index. The same of course applies to any other content that the model returns, though in this case we can print it out as it's being sent. At the end of the stream loop, we should have the entire set of tool calls accumulated. In most cases there would be no tool calls returned by the model, but if it decides that a given tool (function) is appropriate to be invoked in a given context, we’d typically get one tool call in the response. It is technically possible that the model selects multiple tools as well, at which point we would have to define a strategy of handling that (invoke all or ask the user what to do), though we ignore that case in this example for the sake of simplicity and only invoke the first one.
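The accumulation logic can be looked at in isolation from the SDK. The following is a minimal sketch, assuming a simplified `ToolCallChunk` record as a stand-in for the SDK's streaming update type; both `ToolCallChunk` and `ToolCallAccumulatorSketch` are hypothetical names introduced here, and the paper ID in the usage note is a made-up placeholder.

```csharp
#nullable enable
using System.Collections.Generic;
using System.Linq;
using System.Text;

// Hypothetical stand-in for one streamed update: the name usually arrives once,
// while the argument JSON arrives in several fragments.
public record ToolCallChunk(int Index, string? Name, string? ArgumentsFragment);

// Hypothetical helper, for illustration only; not part of the sample application.
public static class ToolCallAccumulatorSketch
{
    // Stitches the fragments back together, one entry per tool call index.
    public static List<(string Name, string Arguments)> Accumulate(IEnumerable<ToolCallChunk> chunks)
    {
        var names = new Dictionary<int, string>();
        var arguments = new Dictionary<int, StringBuilder>();

        foreach (var chunk in chunks)
        {
            if (chunk.Name != null)
            {
                names[chunk.Index] = chunk.Name;
            }

            if (chunk.ArgumentsFragment != null)
            {
                if (!arguments.TryGetValue(chunk.Index, out var builder))
                {
                    builder = new StringBuilder();
                    arguments[chunk.Index] = builder;
                }

                builder.Append(chunk.ArgumentsFragment);
            }
        }

        // order by index so that "the first tool call" is well defined
        return names
            .OrderBy(n => n.Key)
            .Select(n => (
                Name: n.Value,
                Arguments: arguments.TryGetValue(n.Key, out var b) ? b.ToString() : ""))
            .ToList();
    }
}
```

Feeding it three chunks for index 0, one carrying the name `SummarizePaper` and two carrying the halves of `{"paperId":"2403.00001"}`, produces a single entry with the complete JSON string. Sorting by index is a small deviation from the loop above, which simply takes whatever entry the dictionary returns first.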

Once a function call has been indicated by the model, we should have both the function name and its arguments as a JSON string. We can then use the InvokeFunction method of the ExecutionHelper to perform the invocation.

One thing that is important when working with tools and tool calls is to make sure that we provide enough contextual feedback to the model about the tools when maintaining the interaction history. This is why we add both the tool calls and the results of those tool calls to the conversation history, so that the model can utilize them in subsequent interactions. In our case the output of the functions (the presentation of the papers or the summaries) is not relevant for the model, so we only add the success or failure message to the history.

With this in place, we are ready to test the application. Below is the screenshot from a sample conversation - notice how the model is aware of the dates, and how it correctly infers the function calls based on the user input:

Concert booking sample 🔗

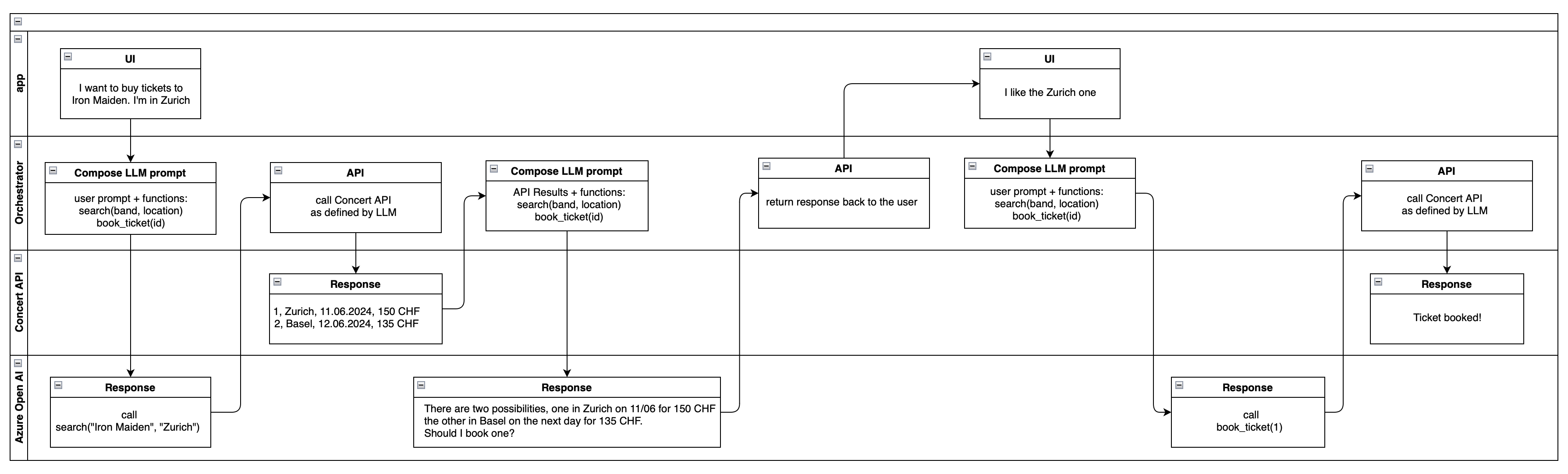

The previous example was interesting - but was also somewhat limited. The tool usage was unidirectional - that is, the model was selecting which tool to call, and the application was executing it. In a more complex scenario, we might want to have the model orchestrate a more complex workflow, where the tool output is not presented directly to the user, but instead used by the model to make further decisions.

This is effectively what we described in the example from part 1. Here is that workflow again:

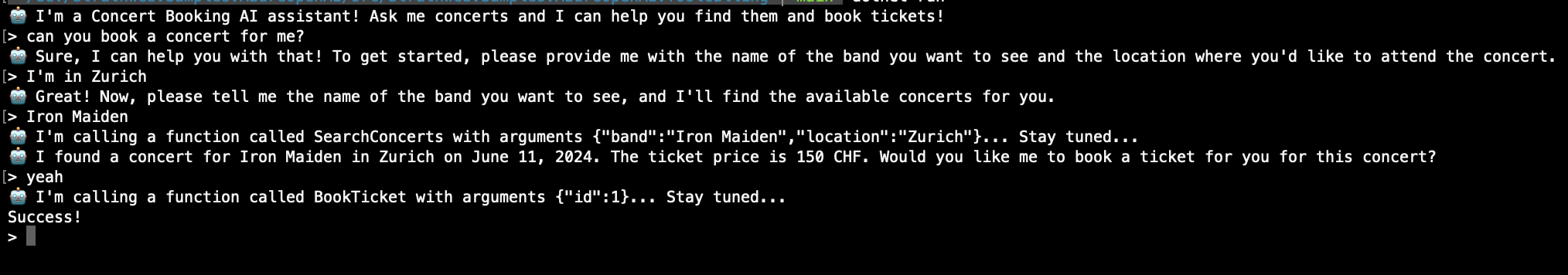

Let’s now use the code from the earlier arXiv sample, and reimplement it as the concert booking scenario. We will need to create a new client, representing the concert API (with a new set of functions, of course), and then create the ExecutionHelper to be able to call those new tools.

The Concert API is shown below. This is just a simple mock, with some basic in-memory data, but it should give you an idea of what the functions could look like in a real-world scenario, where we’d of course be calling some external service.

public enum Location

{

Zurich,

Basel,

Toronto,

NewYork

}

public class ConcertApi

{

private List<Concert> Concerts = new()

{

new Concert(1, new DateTime(2024, 6, 11), "Iron Maiden", Location.Zurich, 150, "CHF"),

new Concert(2, new DateTime(2024, 6, 12), "Iron Maiden", Location.Basel, 135, "CHF"),

new Concert(3, new DateTime(2024, 8, 15), "Dropkick Murphys", Location.Toronto, 145, "CAD"),

new Concert(4, new DateTime(2025, 1, 11), "Green Day", Location.NewYork, 200, "USD"),

};

public Task<string> SearchConcerts(string band, Location location)

{

var matches = Concerts.Where(c =>

string.Equals(c.Band, band, StringComparison.InvariantCultureIgnoreCase) && c.Location == location).ToArray();

return Task.FromResult(JsonSerializer.Serialize(matches));

}

public Task BookTicket(uint id)

{

if (!Concerts.Any(c => c.Id == id))

{

throw new Exception("No such concert!");

}

// assume when no exception is thrown, booking succeeds

return Task.CompletedTask;

}

}

public record Concert(uint Id, DateTime TimeStamp, string Band, Location Location, double Price, string Currency);

The two exposed functions are SearchConcerts and BookTicket. The first one searches for concerts by band name and location, and the second one books a ticket to a concert based on its ID, as outlined by the flow chart we just saw.

The ExecutionHelper will be very similar to the one we used for the arXiv example, but with the functions adjusted to the new API. The GetAvailableFunctions method will now expose the two functions from the Concert API:

class ExecutionHelper

{

private readonly ConcertApi _concertApi;

public ExecutionHelper(ConcertApi concertApi)

{

_concertApi = concertApi;

}

public List<FunctionDefinition> GetAvailableFunctions()

=> new()

{

new FunctionDefinition

{

Description =

"Searches for concerts by a specific band name and location. Returns a list of concerts, each one with its ID, date, band, location, ticket prices and currency.",

Name = nameof(ConcertApi.SearchConcerts),

Parameters = BinaryData.FromObjectAsJson(new

{

type = "object",

properties = new

{

band = new { type = "string" },

location = new

{

type = "string",

@enum = new[] { "Zurich", "Basel", "Toronto", "NewYork" }

},

},

required = new[] { "band", "location" }

})

},

new FunctionDefinition

{

Description = "Books a concert ticket to a concert, using the concert's ID.",

Name = nameof(ConcertApi.BookTicket),

Parameters = BinaryData.FromObjectAsJson(new

{

type = "object",

properties = new { id = new { type = "integer" }, },

required = new[] { "id" }

})

}

};

public async Task<ToolResult> InvokeFunction(string functionName, string functionArguments)

{

try

{

if (functionName == nameof(ConcertApi.SearchConcerts))

{

using var doc = JsonDocument.Parse(functionArguments);

var root = doc.RootElement;

var locationString = root.GetProperty("location").GetString();

var location = Enum.Parse<Location>(locationString);

var band = root.GetProperty("band").GetString();

var result = await _concertApi.SearchConcerts(band, location);

return new ToolResult(result, true);

}

if (functionName == nameof(ConcertApi.BookTicket))

{

using var doc = JsonDocument.Parse(functionArguments);

var root = doc.RootElement;

var id = root.GetProperty("id").GetUInt32();

await _concertApi.BookTicket(id);

return new ToolResult("Success!");

}

}

catch (Exception e)

{

AnsiConsole.WriteException(e);

// in case of any error, send back to user in error state

}

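// the output ends up as the content of a tool message, which is assumed here to reject null, so return a short error text instead

return new ToolResult("The function call failed.", IsError: true);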

}

}

public record ToolResult(string Output, bool BackToModel = false, bool IsError = false);

The ExecutionHelper is doing mostly the same stuff as before, but now it is calling the Concert API instead of the arXiv API. The InvokeFunction method is responsible for calling the appropriate method on the Concert API, based on the function name and arguments provided by the model.

The main difference from the previous example is that we now have a ToolResult record, which encapsulates the result of the tool call. This is important, as we not only need to tell the model whether the function call succeeded or failed, but also pass along the result of the call itself, so that the model can act upon it. For the search function we also no longer present the output directly to the user.

The bootstrapping code is mostly unchanged, naturally with the system instruction adjusted to the concert booking scenario:

var systemInstructions = $"""

You are an AI assistant designed to support users in searching and booking concert tickets. Adhere to the following rules rigorously:

1. **Direct Parameter Requirement:**

When a user requests an action, directly related to the functions, you must never infer or generate parameter values, especially IDs, band names or locations on your own.

If a parameter is needed for a function call and the user has not provided it, you must explicitly ask the user to provide this specific information.

2. **Avoid Assumptions:**

Do not make assumptions about parameter values.

If the user's request lacks clarity or omits necessary details for function execution, you are required to ask follow-up questions to clarify parameter values.

3. **User Clarification:**

If a user's request is ambiguous or incomplete, you should not proceed with function invocation.

Instead, ask for the missing information to ensure the function can be executed accurately and effectively.

4. **Grounding in Time:**

Today is {DateTime.Now.ToString("D")}.

Yesterday was {DateTime.Now.AddDays(-1).ToString("D")}. You will correctly infer past dates.

Tomorrow will be {DateTime.Now.AddDays(1).ToString("D")}.

""";

var introMessage =

"I'm a Concert Booking AI assistant! Ask me about concerts and I can help you find them and book tickets!";

AnsiConsole.MarkupLine($":robot: {introMessage}");

var openAiClient = new OpenAIClient(new Uri(azureOpenAiServiceEndpoint),

new AzureKeyCredential(azureOpenAiServiceKey));

var concertApi = new ConcertApi();

var executionHelper = new ExecutionHelper(concertApi);

var messageHistory = new List<ChatRequestMessage>

{

new ChatRequestSystemMessage(systemInstructions),

new ChatRequestAssistantMessage(introMessage)

};

The interaction loop will now contain one key difference from the previous example - we will now provide the tool outcome back to the model, so that it can act upon it. This is done by adding the tool output to the history as a tool message; the BackToModel flag on the ToolResult then tells the loop that the result should be sent back to the model right away.

This also implies that the prompt from the user is no longer necessary on every iteration - the model will be able to continue the conversation based on the tool results. The user prompt is only needed when the model asks for more information or when there is nothing else to act on.

var skipUserPrompt = false;

while (true)

{

if (!skipUserPrompt)

{

Console.Write("> ");

var prompt = Console.ReadLine();

messageHistory.Add(new ChatRequestUserMessage(prompt));

}

var request = new ChatCompletionsOptions(azureOpenAiDeploymentName, messageHistory)

{

Temperature = 0,

MaxTokens = 400

};

foreach (var function in executionHelper.GetAvailableFunctions())

{

request.Tools.Add(new ChatCompletionsFunctionToolDefinition(function));

}

var completionResponse = await openAiClient.GetChatCompletionsStreamingAsync(request);

AnsiConsole.Markup(":robot: ");

var modelResponse = new StringBuilder();

var functionNames = new Dictionary<int, string>();

var functionArguments = new Dictionary<int, StringBuilder>();

await foreach (var message in completionResponse)

{

if (message.ToolCallUpdate is StreamingFunctionToolCallUpdate functionToolCallUpdate)

{

if (functionToolCallUpdate.Name != null)

{

functionNames[functionToolCallUpdate.ToolCallIndex] = functionToolCallUpdate.Name;

}

if (functionToolCallUpdate.ArgumentsUpdate != null)

{

if (!functionArguments.TryGetValue(functionToolCallUpdate.ToolCallIndex, out var argumentsBuilder))

{

argumentsBuilder = new StringBuilder();

functionArguments[functionToolCallUpdate.ToolCallIndex] = argumentsBuilder;

}

argumentsBuilder.Append(functionToolCallUpdate.ArgumentsUpdate);

}

}

if (message.ContentUpdate != null)

{

modelResponse.Append(message.ContentUpdate);

AnsiConsole.Write(message.ContentUpdate);

}

}

var modelResponseText = modelResponse.ToString();

var assistantResponse = new ChatRequestAssistantMessage(modelResponseText);

// call the first tool that was found

// in more sophisticated scenarios we may want to call multiple tools or let the user select one

var functionCall = functionNames.FirstOrDefault().Value;

var functionArgs = functionArguments.FirstOrDefault().Value?.ToString();

if (functionCall != null && functionArgs != null)

{

var toolCallId = Guid.NewGuid().ToString();

assistantResponse.ToolCalls.Add(new ChatCompletionsFunctionToolCall(toolCallId, functionCall, functionArgs));

AnsiConsole.WriteLine($"I'm calling a function called {functionCall} with arguments {functionArgs}... Stay tuned...");

var functionResult = await executionHelper.InvokeFunction(functionCall, functionArgs);

var toolOutput = new ChatRequestToolMessage(functionResult.Output, toolCallId);

messageHistory.Add(assistantResponse);

messageHistory.Add(toolOutput);

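// only skip the user prompt when this particular tool result should go back to the model

skipUserPrompt = functionResult.BackToModel;

if (!functionResult.BackToModel)

{

Console.WriteLine(functionResult.Output);

}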

continue;

}

messageHistory.Add(assistantResponse);

skipUserPrompt = false;

Console.WriteLine();

}

The core difference is in the second part of the code - when we receive the tool result, we add it to the history, and then check if the BackToModel flag is set. If it is, we skip the user prompt, and let the model continue the conversation based on the tool result. If the flag is not set, we print the output to the console, and then proceed with the next iteration.
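The effect of the flag on the flow of the conversation can be illustrated without calling any model at all. The sketch below replays a scripted sequence of model turns and records, for each iteration, whether the user would have been asked for input first. `ModelTurn` and `LoopControlSketch` are hypothetical names, and the sketch makes the simplifying assumption that every turn other than a BackToModel tool result hands control back to the user.

```csharp
#nullable enable
using System.Collections.Generic;

public record ToolResult(string Output, bool BackToModel = false, bool IsError = false);

// Hypothetical stand-in for one model turn: plain text when ToolResult is null,
// otherwise the outcome of the tool call that the model requested.
public record ModelTurn(string Text, ToolResult? ToolResult = null);

// Hypothetical helper, for illustration only; not part of the sample application.
public static class LoopControlSketch
{
    // Returns, per iteration, whether the user was prompted before the model was called.
    public static List<bool> Replay(IEnumerable<ModelTurn> turns)
    {
        var userPrompted = new List<bool>();
        var skipUserPrompt = false;

        foreach (var turn in turns)
        {
            userPrompted.Add(!skipUserPrompt);

            // a tool result flagged BackToModel goes straight back to the model,
            // anything else returns control to the user
            skipUserPrompt = turn.ToolResult is { BackToModel: true };
        }

        return userPrompted;
    }
}
```

Replaying a search result flagged with BackToModel, followed by a text answer, a booking result without the flag, and a final text answer gives the sequence true, false, true, true: only the iteration right after the search skips the user prompt.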

This is really everything we need to implement this sample, and it should be ready for testing. Below is a screenshot from a sample conversation - note how the model is able to guide the user through the process of searching for a concert, and then booking a ticket:

Conclusion

In this part 2 of the series on tool calling we have shown how to build two different command line AI Assistants, both taking advantage of the tool calling integration. We have orchestrated everything by hand, using the Azure OpenAI Service API directly, without any additional AI frameworks.

Tool calling is a very powerful feature, and can be used to build complex workflows where the model can choose to invoke various tools, and act upon their results. This can be used to build very sophisticated conversational applications, where the model can guide the user through complex processes, and provide feedback based on the results of the tool calls.

As always, the source code for this blog post is available on GitHub.